Infrared technology is making its way into an increasingly wide range of innovative consumer applications. Infrared radiation was discovered around 1800. However, it took quite some time before it could be put to practical use and integrated into marketable products. Today’s powerful infrared technology is being used in a variety of novel ways, adding value to advanced systems for autonomous vehicles and smart buildings, for example.

Infrared can be integrated into existing systems to add new technical capabilities. And, as production volumes increase, costs will continue to come down, making the technology even more accessible for an even wider range of uses.

Here are five things you need to know about infrared technology. Read on to learn how this advanced technology is bringing added value to a range of industries.

- The electromagnetic spectrum and the different wavelengths

How does the electromagnetic spectrum work?

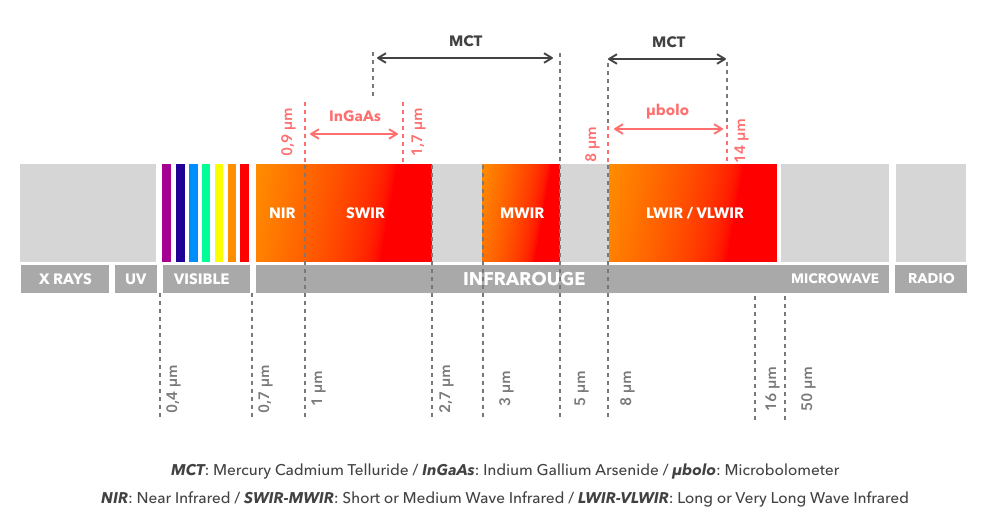

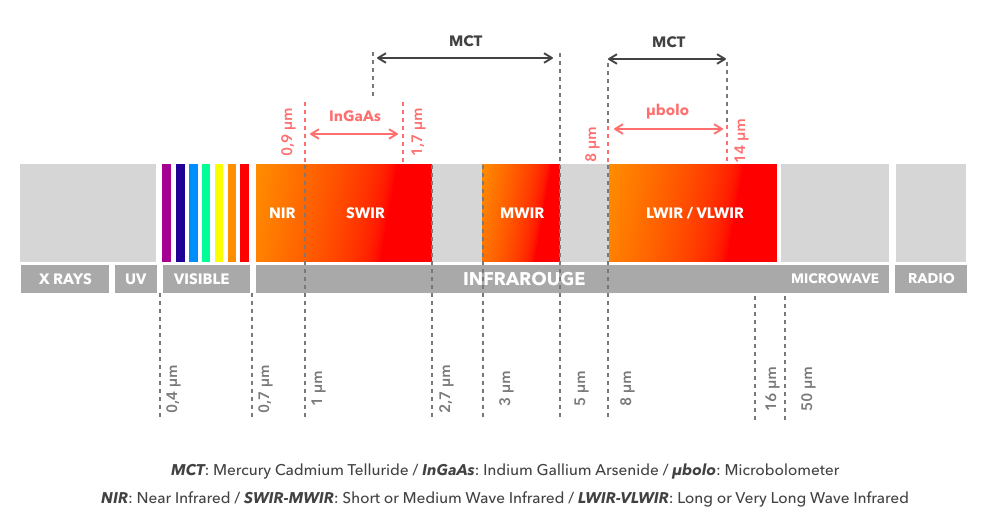

Radiation is characterized by its frequency and wavelength. And not all radiation is visible to the human eye. Infrared radiation has longer wavelengths than radiation in the visible spectrum and shorter wavelengths than microwave or terahertz radiation.
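To make the link between frequency and wavelength concrete, here is a minimal Python sketch based on the standard relation c = λν (speed of light = wavelength × frequency). The function name `frequency_hz` and the sample wavelengths are illustrative choices, not taken from any particular product or library.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, in meters per second


def frequency_hz(wavelength_um: float) -> float:
    """Return the frequency in hertz for a wavelength given in micrometers."""
    if wavelength_um <= 0:
        raise ValueError("wavelength must be positive")
    return C_M_PER_S / (wavelength_um * 1e-6)


# Sample wavelengths (assumed for illustration): green light, then three infrared values.
for wavelength_um in (0.55, 1.5, 4.0, 10.0):
    terahertz = frequency_hz(wavelength_um) / 1e12
    print(f"{wavelength_um:5.2f} um -> {terahertz:7.1f} THz")
```

The longer the wavelength, the lower the frequency, which is why infrared sits between visible light and terahertz or microwave radiation.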

The infrared part of the electromagnetic spectrum is divided into several wavelength bands, and each one has unique characteristics.

NIR (near infrared): These are the shortest wavelengths in the infrared spectrum, and the closest to the visible spectrum, at between 0.78 µm and 2.5 µm. The underlying principle of NIR spectroscopy, for example, is molecular vibration caused by the excitation of molecules by the infrared source. The molecules absorb infrared waves, which changes the vibration of their chemical bonds. This creates a measurable signal.

SWIR (short wave infrared): The spectrum from 1 µm to 2.7 µm. Silicon-based detectors are limited to around 1.0 µm. For this reason, SWIR imaging requires optical and electronic components capable of operating over the 0.9 µm to 1.7 µm range, which is the case for uncooled InGaAs detectors.

MWIR (medium wave infrared): The spectrum from 3 µm to 5 µm. Thermal imaging begins in this part of the spectrum, where the temperature differences present in the scene being observed start to show up in the image. MWIR detection requires cryogenically cooled technologies like HgCdTe (MCT, or MerCad), a II-VI semiconductor material.

LWIR (long wave infrared): The spectrum from 7 µm to 14 µm. A detector captures the heat given off by objects in the scene being observed. Unlike visible-light detectors, which detect the light reflected off objects, LWIR detectors do not need a light source. They generate the same image during the day or at night, regardless of the ambient light.
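The band limits listed above can be written down as a small lookup, sketched here in Python. Because the NIR and SWIR ranges quoted in this article overlap, the hypothetical helper `bands_for` returns every matching band rather than a single one. The second helper uses Wien's displacement law (peak wavelength ≈ 2898 µm·K divided by temperature), which is not discussed in the article but helps explain why objects near room temperature show up in the LWIR band. All names are illustrative assumptions.

```python
# Band limits in micrometers, as quoted in this article (NIR and SWIR overlap).
BANDS_UM = {
    "NIR": (0.78, 2.5),
    "SWIR": (1.0, 2.7),
    "MWIR": (3.0, 5.0),
    "LWIR": (7.0, 14.0),
}

WIEN_B_UM_K = 2897.77  # Wien displacement constant, in micrometer-kelvins


def bands_for(wavelength_um: float) -> list[str]:
    """Return the names of all bands whose range contains the wavelength."""
    return [name for name, (low, high) in BANDS_UM.items()
            if low <= wavelength_um <= high]


def peak_wavelength_um(temperature_k: float) -> float:
    """Return the wavelength of peak blackbody emission (Wien's law)."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B_UM_K / temperature_k


# An object at about 300 K (room temperature) peaks inside the LWIR band.
peak = peak_wavelength_um(300.0)
print(f"Peak emission at 300 K: {peak:.2f} um, bands: {bands_for(peak)}")
```

A wavelength such as 6 µm falls between the MWIR and LWIR ranges given here, so the lookup returns an empty list for it.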

- The two main technologies

There are currently two main types of detectors:

- Cooled: These detectors are kept at an extremely low temperature using a cryogenic cooling system. This system lowers the sensor temperature to cryogenic levels and reduces the heat-induced noise to a level lower than that of the signal emitted by the scene.

The primary advantages of this type of detector are incredibly high resolution and sensitivity and the resulting high image quality. However, cooled detectors are bulkier and more expensive than uncooled detectors. This makes them less suitable for certain applications where form factor is more important than image quality.

- Uncooled detectors or microbolometers: These detectors do not require a cooling system. With microbolometer technology, temperature differences in a scene trigger changes in the microbolometer’s temperature. These changes are then converted into electrical signals and then into images. Systems equipped with uncooled detectors are more cost effective and require less maintenance than systems with cooled detectors.

- NETD, the key indicator of detector sensitivity

NETD (noise-equivalent temperature difference) measures a camera’s thermal sensitivity. It is the smallest temperature difference a camera can detect. It is stated in millikelvin (mK) or in degrees Celsius (°C). The lower the NETD, the better the camera will be at detecting thermal contrast. Therefore, NETD can be considered analogous to contrast in visible light detectors.

In infrared detectors, NETD can range between 25 mK and 100 mK for uncooled microbolometers. For cooled detectors it is around 10 mK.

NETD is particularly important for scenes with low thermal contrast (scenes where all of the objects are pretty much at the same temperature, such as landscapes, for example).
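Since NETD is the temperature difference that produces a signal equal to the noise, a rough signal-to-noise figure for a given thermal contrast is simply the contrast divided by the NETD. The Python sketch below applies that ratio to the NETD values quoted above. The 50 mK scene contrast and the function name `thermal_snr` are assumptions chosen for illustration, and the linear ratio is a simplification that ignores optics and scene conditions.

```python
def thermal_snr(delta_t_mk: float, netd_mk: float) -> float:
    """Approximate signal-to-noise ratio for a temperature difference.

    Both arguments are in millikelvin. A result below 1 means the
    contrast is buried in the detector noise.
    """
    if netd_mk <= 0:
        raise ValueError("NETD must be positive")
    return abs(delta_t_mk) / netd_mk


scene_contrast_mk = 50.0  # assumed low-contrast scene, for illustration only

for label, netd_mk in (("uncooled, 100 mK", 100.0),
                       ("uncooled, 25 mK", 25.0),
                       ("cooled, 10 mK", 10.0)):
    snr = thermal_snr(scene_contrast_mk, netd_mk)
    print(f"{label:>17}: SNR ~ {snr:.1f}")
```

Under these assumptions, the same 50 mK contrast sits below the noise floor of a 100 mK detector but well above it for a 10 mK detector, which is why NETD matters most in low-contrast scenes.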

- Resolution and field of view (FOV)

Field of view (FOV) is how wide of an angle a camera can capture. FOV must be considered with image resolution (the number of pixels).

Resolution indicates how sharp the image is, while FOV indicates how wide it is. The higher the resolution (in other words, the more pixels you have), the sharper the image. However, a given detector spreads its pixels across the whole FOV, so to put more pixels on a given object, you must reduce the FOV.
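The trade-off between FOV and detail can be estimated with basic trigonometry: at distance d, a horizontal FOV of angle θ covers a scene width of 2·d·tan(θ/2), and dividing that width by the number of pixels across gives the size of the scene area seen by each pixel. The Python sketch below assumes a hypothetical 640-pixel-wide detector, a 100 m distance, and two example lens angles. None of these figures come from the article.

```python
import math


def scene_width_m(fov_deg: float, distance_m: float) -> float:
    """Return the width of the scene covered at a given distance."""
    if not 0 < fov_deg < 180:
        raise ValueError("FOV must be between 0 and 180 degrees")
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def pixel_footprint_m(fov_deg: float, distance_m: float, pixels_across: int) -> float:
    """Return the scene width covered by a single pixel."""
    if pixels_across <= 0:
        raise ValueError("pixel count must be positive")
    return scene_width_m(fov_deg, distance_m) / pixels_across


# Hypothetical setup: same 640-pixel-wide detector, two different lenses.
for fov_deg in (50.0, 25.0):
    footprint = pixel_footprint_m(fov_deg, distance_m=100.0, pixels_across=640)
    print(f"FOV {fov_deg:4.1f} deg -> {footprint * 100:.1f} cm per pixel at 100 m")
```

With the same detector, the narrower lens gives each pixel a smaller patch of the scene, so an object at that distance is covered by more pixels.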

- Analog or digital

As its name suggests, an analog-to-digital converter (ADC) is a system that converts an analog signal into a digital (binary) signal. A digital-to-analog converter (DAC) does the opposite and converts a digital signal into an analog signal. In all-digital models, the ADC is integrated into the sensor. It converts the analog video signal into a digital signal that can be processed by software to extract the desired information from the scene. All-digital models can also include a polarization-switching DAC for the sensing element. With these models, detector integrators no longer need to develop power components for the detectors, which makes the detectors much easier to integrate.
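As a rough illustration of what an ADC and a DAC do, the Python sketch below models an idealized converter pair: the ADC maps a voltage to one of 2^n integer codes, and the DAC maps a code back to a voltage. The 14-bit depth, the 2.0 V reference, and the function names are assumptions for the example. Real detector electronics add noise, offsets, and non-linearity that this model leaves out.

```python
def adc_code(voltage: float, v_ref: float, bits: int) -> int:
    """Idealized ADC: map a voltage in [0, v_ref] to an integer code."""
    if v_ref <= 0 or bits <= 0:
        raise ValueError("v_ref and bits must be positive")
    levels = 1 << bits                     # number of distinct codes, 2**bits
    code = int(voltage / v_ref * levels)   # truncate to the code below
    return max(0, min(levels - 1, code))   # clamp out-of-range inputs


def dac_voltage(code: int, v_ref: float, bits: int) -> float:
    """Idealized DAC: map an integer code back to a voltage."""
    levels = 1 << bits
    if not 0 <= code < levels:
        raise ValueError("code out of range for this bit depth")
    return code / levels * v_ref


# Hypothetical 14-bit converter with a 2.0 V reference.
sample_v = 1.2345
code = adc_code(sample_v, v_ref=2.0, bits=14)
print(f"{sample_v} V -> code {code} -> {dac_voltage(code, 2.0, 14):.6f} V")
```

The voltage recovered from the code differs slightly from the input. That difference is the quantization error, and it shrinks as the bit depth increases.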

To learn more about the many ways that infrared technology can be used across the spectrum, download our white paper on “Understanding and Using Infrared Technology.”