The advent of advanced driver assistance systems (ADAS) and autonomous vehicles (AV) is revolutionizing the way we travel and transport goods by road. These technologies have the potential to make road travel easier and safer, reducing the number of accidents by limiting human error, including the ever-growing risk of distraction at the wheel.

Sensors are improving, but no single sensor can make driving safe on its own. A suite of complementary sensors provides the vital information and redundancy needed to maintain safety across a wide range of driving conditions. A typical sensor suite includes a radar and one or more visible-light cameras. Other sensors can also be added, such as LIDAR, infrared cameras, or thermal cameras.

Thermal imaging technology lends another dimension to these systems, but what role does it play?

An ADAS is an active safety system that provides information or assistance to:

- Prevent dangerous situations that could lead to an accident,

- Take over tasks that could distract the driver,

- Help drivers perceive their surroundings (detecting overtaking vehicles, frost, pedestrians, etc.),

- Enable the vehicle to perceive risks and react faster than the driver’s reflexes allow.

Advanced driver assistance systems and collision avoidance systems, such as Forward Collision Warning (FCW) and Autonomous Emergency Braking (AEB), are important areas of development in the transport sector.

Any system that reduces or eases the driver’s workload can be considered a driver assistance system. On the driving automation scale, these systems correspond to levels 1 and 2:

- Level 0 means no assistance.

- Level 1 corresponds to features such as ACC (adaptive cruise control).

- Level 2 combines features that assist with both lateral (steering) and longitudinal (acceleration and braking) control.

Systems at levels 0 and 1 are the most common and will remain so until the end of the decade.

From level 3 upward, we enter the realm of automated driving. Some legislation, such as French law, allows vehicles to drive in automated mode under certain conditions. In this scenario, the driver can delegate driving to the vehicle but must remain ready to take back control whenever necessary.

The final stage in this evolution is the fully automated vehicle, or self-driving car, which corresponds to level 5. However, driverless robotaxis are still at the innovation stage, with a unit cost of $0.5m to $1m.
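The levels described above can be captured in a small lookup structure. The sketch below is a minimal illustration, assuming the six levels (0 to 5) of the SAE J3016 driving automation scale with shortened names; the class and function names are hypothetical and not part of any standard API.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """Driving automation levels 0-5 (SAE J3016), names shortened for illustration."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1       # e.g. adaptive cruise control (ACC)
    PARTIAL_AUTOMATION = 2      # combined lateral and longitudinal assistance
    CONDITIONAL_AUTOMATION = 3  # driver must take back control when asked
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5         # fully self-driving vehicle


def is_driver_assistance(level: SaeLevel) -> bool:
    """Levels 1 and 2 assist a driver who remains in charge of the vehicle."""
    return SaeLevel.DRIVER_ASSISTANCE <= level <= SaeLevel.PARTIAL_AUTOMATION


def is_automated_driving(level: SaeLevel) -> bool:
    """From level 3 upward, the system performs the driving task itself."""
    return level >= SaeLevel.CONDITIONAL_AUTOMATION


def human_fallback_required(level: SaeLevel) -> bool:
    """Up to level 3, a human must drive, supervise, or be ready to take over."""
    return level <= SaeLevel.CONDITIONAL_AUTOMATION
```

For instance, `is_automated_driving(SaeLevel.CONDITIONAL_AUTOMATION)` and `human_fallback_required(SaeLevel.CONDITIONAL_AUTOMATION)` are both `True`, matching the level 3 scenario where the driver delegates driving but stays ready to take over.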

An ADAS alerts the driver to danger, takes action to avoid an accident, or both. Vehicles equipped with ADAS can sense their surroundings, process that information quickly and accurately on board, and give the driver precise information.

Vehicles equipped with ADAS carry a set of advanced sensors that reinforce the driver’s perception and decision-making. The system combines complementary technologies: visible cameras help detect objects in the vehicle’s path, radar measures the distance and speed of moving obstacles, and LIDAR builds an accurate 3D map of the car’s surroundings.

The ADAS architecture consists of sensors, interfaces, and a powerful processor that fuses all the data and makes decisions in real time. The sensors continuously monitor the vehicle’s surroundings and send this information to embedded computers, which set priorities and act accordingly.
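To make the sense, prioritize, and act loop concrete, here is a minimal Python sketch of how an embedded computer might rank detections by time-to-collision (distance divided by closing speed) and choose an action. The `Detection` type, the sensor names, and the warn/brake thresholds are hypothetical, chosen for illustration rather than taken from any production ADAS.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Detection:
    sensor: str               # e.g. "camera", "radar", "lidar", "thermal"
    label: str                # e.g. "pedestrian", "car"
    distance_m: float         # range to the object in metres
    closing_speed_mps: float  # positive when the gap is shrinking


# Hypothetical thresholds, for illustration only.
WARN_TTC_S = 2.5
BRAKE_TTC_S = 1.2


def time_to_collision(d: Detection) -> float:
    """Seconds until contact at constant speeds; infinite if the gap is not closing."""
    if d.closing_speed_mps <= 0:
        return math.inf
    return d.distance_m / d.closing_speed_mps


def decide(detections: list[Detection]) -> tuple[str, Detection | None]:
    """Prioritize the most urgent object (lowest TTC) and choose an action."""
    if not detections:
        return "none", None
    most_urgent = min(detections, key=time_to_collision)
    ttc = time_to_collision(most_urgent)
    if ttc < BRAKE_TTC_S:
        return "brake", most_urgent  # e.g. trigger emergency braking
    if ttc < WARN_TTC_S:
        return "warn", most_urgent   # e.g. forward collision warning
    return "none", most_urgent


frame = [
    Detection("radar", "car", distance_m=60.0, closing_speed_mps=5.0),            # TTC 12 s
    Detection("thermal", "pedestrian", distance_m=20.0, closing_speed_mps=12.0),  # TTC ~1.7 s
]
action, target = decide(frame)
print(action, target.label)  # warn pedestrian
```

Ranking by time-to-collision rather than raw distance lets a slow, distant car yield priority to a nearby pedestrian the vehicle is closing on quickly.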

- The benefits of thermal imaging for ADAS

Statistics show that 1.35 million people die on the world’s roads every year, 54% of whom are vulnerable road users such as pedestrians, cyclists, and motorcyclists (sources: NHTSA and WHO).

Moreover, in the US, 80% of accidents involving pedestrians occur in poor light or weather conditions (sources: NHTSA and WHO).

As we have seen, current perception systems combine visible-light cameras and radar to provide information about the vehicle’s surroundings. However, certain factors limit their efficacy. When visibility is poor, obstacle detection and recognition distances become too short to keep the vehicle and other road users safe. In these conditions, thermal imaging can complement existing systems by helping to detect and recognize objects even in the most challenging conditions (night, fog, rain). Adding thermal imaging to vehicles therefore extends the use cases of current sensors and provides redundant information for safer decision-making. Thermal images are compatible with AI-based detection and recognition algorithms, and the information they provide can be displayed to the driver or processed directly by the vehicle.
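One simple way to see why redundancy helps is noisy-OR fusion. If sensors miss independently, the probability that at least one of them detects an obstacle is 1 - prod(1 - p_i), where p_i is each sensor's detection probability. The sketch below assumes independent sensors and uses made-up confidence values; real fusion must also handle false positives and correlated failures, which this ignores.

```python
def fused_detection_probability(confidences: dict[str, float]) -> float:
    """Noisy-OR fusion: probability that at least one sensor detects the object.

    Assumes each sensor misses independently of the others (a simplification).
    """
    miss_all = 1.0
    for sensor, p in confidences.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{sensor}: confidence {p} is outside [0, 1]")
        miss_all *= 1.0 - p  # the fused system misses only if every sensor misses
    return 1.0 - miss_all


# Hypothetical night-time confidences for a pedestrian ahead (illustrative values).
night_without_thermal = {"visible": 0.2, "radar": 0.5}
night_with_thermal = {"visible": 0.2, "radar": 0.5, "thermal": 0.9}

print(round(fused_detection_probability(night_without_thermal), 2))  # 0.6
print(round(fused_detection_probability(night_with_thermal), 2))     # 0.96
```

Under these assumed numbers, adding a sensor that performs well at night cuts the chance of missing the pedestrian from 40% to 4%.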

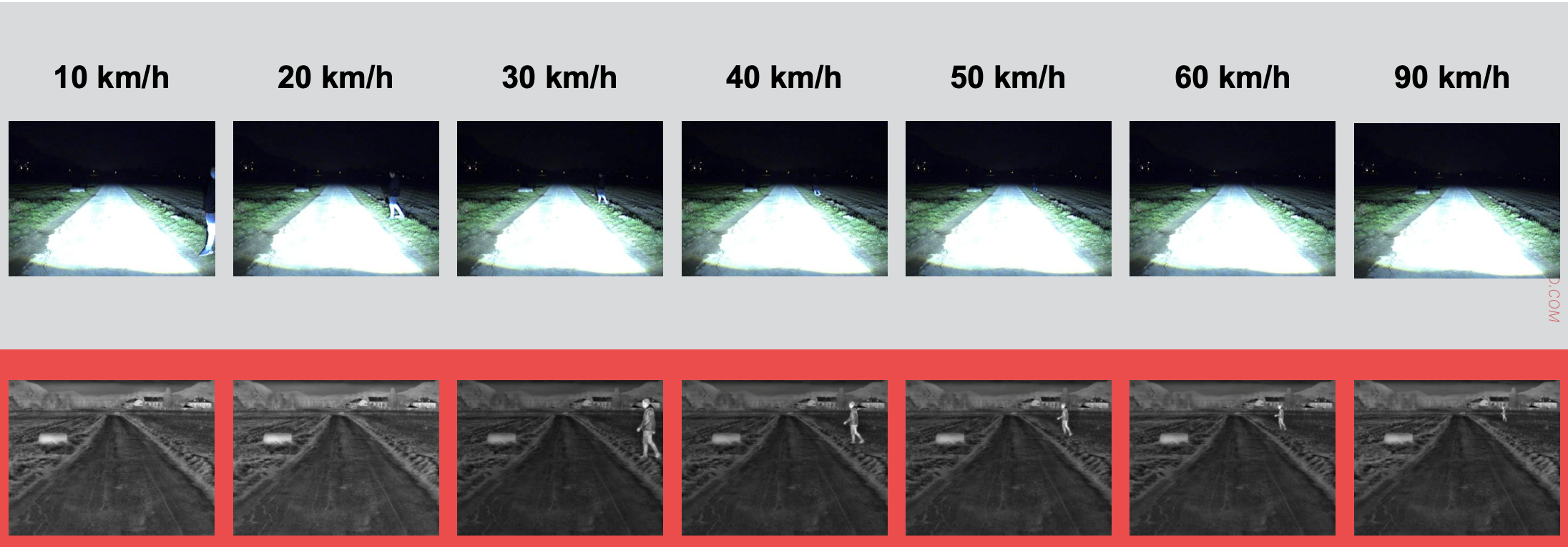

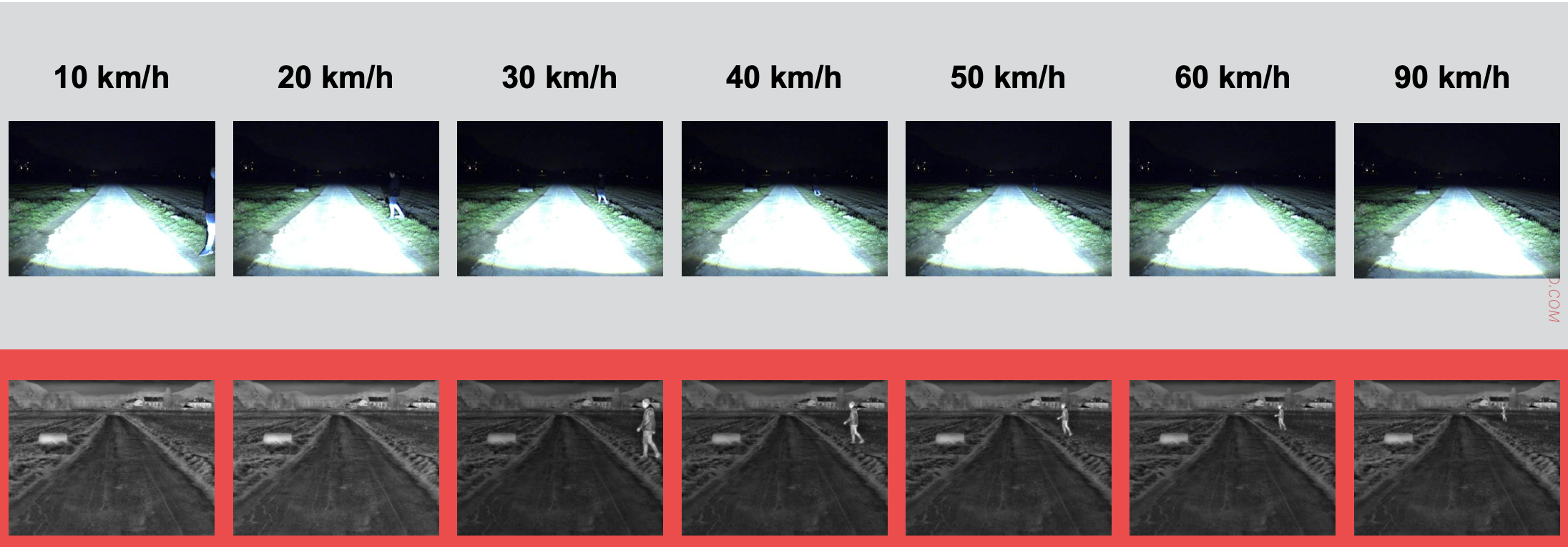

These images were taken on a country road with no street lighting or moonlight, in clear weather. In each image, the pedestrian stands at the vehicle’s stopping distance for the speed shown.

Top: Vision with visible imagers

Bottom: Benefit of thermal imaging

Above 30 km/h with low-beam headlights, the visible camera does not detect pedestrians early enough.
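The stopping distance mentioned in the caption is commonly modeled as reaction distance plus braking distance, d = v * t_r + v^2 / (2a), where v is the speed in m/s, t_r the reaction time, and a the deceleration. The sketch below uses an assumed 1 s reaction time and 7 m/s² deceleration (a dry-road ballpark). These are illustrative defaults, not the parameters behind the images above, and `detected_in_time` is a hypothetical helper.

```python
def stopping_distance_m(speed_kmh: float,
                        reaction_time_s: float = 1.0,
                        deceleration_mps2: float = 7.0) -> float:
    """Reaction distance plus braking distance: v * t_r + v**2 / (2 * a)."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    reaction_distance = v * reaction_time_s
    braking_distance = v * v / (2.0 * deceleration_mps2)
    return reaction_distance + braking_distance


def detected_in_time(detection_range_m: float, speed_kmh: float,
                     reaction_time_s: float = 1.0,
                     deceleration_mps2: float = 7.0) -> bool:
    """True if an obstacle first seen at detection_range_m can still be avoided."""
    return detection_range_m >= stopping_distance_m(
        speed_kmh, reaction_time_s, deceleration_mps2)


for speed in (30, 50, 80):
    print(f"{speed} km/h -> {stopping_distance_m(speed):.1f} m")
# 30 km/h -> 13.3 m
# 50 km/h -> 27.7 m
# 80 km/h -> 57.5 m
```

Because braking distance grows with the square of speed, a sensor's fixed detection range is quickly outrun. With a hypothetical 25 m detection range, `detected_in_time(25.0, 30)` is `True` but `detected_in_time(25.0, 50)` is `False`.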

Thermal imaging lets you see in any lighting conditions, day or night. It is not affected by headlight glare and is far less hampered than visible cameras by obscurants such as smoke or fog.

Thermal imaging can improve the reliability of ADAS and autonomous vehicles. Combined with machine learning algorithms that classify objects, it provides vital data from the far-infrared part of the electromagnetic spectrum and improves vehicle decision-making in shared environments where other sensors struggle with darkness, shadows, sun glare, fog, smoke, and haze.

Thermal imaging can therefore be used wherever existing vision sensors may be lacking to detect vulnerable road users and save lives.

Download our free case study, which addresses the topic of autonomous emergency braking at night from a theoretical perspective.